
DEBS 2025: Call for Grand Challenge Solutions

The DEBS Grand Challenge is a series of competitions that started in 2010, in which participants from academia and industry compete with the goal of building faster and more scalable distributed and event-based systems that solve a practical problem. Every year, the DEBS Grand Challenge participants have a chance to explore a new data set and a new problem and can compare their results based on common evaluation criteria. The winners of the challenge are announced during the conference. Apart from correctness and performance, submitted solutions are also assessed along a set of non-functional requirements.

Registration is Open! https://cmt3.research.microsoft.com/DEBS2025/Track/3/Submission/Create

Topic: Defect Monitoring in Additive Manufacturing

The 2025 DEBS Grand Challenge focuses on real-time monitoring of defects in Laser Powder Bed Fusion (L-PBF) manufacturing.

L-PBF is an additive manufacturing method: it creates objects by adding material rather than removing it from an initial block, which reduces waste and increases flexibility. Specifically, L-PBF uses a laser to melt successive layers of fine metal powder on top of each other, according to a specification (usually a CAD file).

Image source: http://pencerw.com/feed/2014/12/4/island-scanning-and-the-effects-of-slm-scanning-strategies

Unfortunately, L-PBF is not immune to defects, which can arise from impurities in the material or from calibration errors. In particular, a high degree of porosity (the amount of empty space left inside the material) can severely impact the durability of the printed object.

The established approach to quality assessment is to analyze the object after it has been fully created. However, this wastes time and material, because defects are only discovered once printing is complete. To overcome this, more recent studies propose techniques that monitor the printing process in real time and stop it if there is a high probability of defects.

The goal of the 2025 DEBS Grand Challenge is to implement a real-time monitoring system to detect defects in L-PBF manufacturing. The system receives as input a stream of optical tomography images that capture the temperature of the powder bed for each layer during the manufacturing process. It must compute the probability that the object being created has defects in some areas, enabling rapid reaction when problems occur.

Participation

Participation in the DEBS 2025 Grand Challenge consists of three steps: (1) registration, (2) iterative solution submission, and (3) paper submission.

The first step is to register your submission in the "Grand Challenge track". Shortly after registering, you will get access to a containerized environment for testing your solution on your own computer, along with a sample naïve solution to compare your approach against.

Once your solution is ready, you can submit it to the evaluation platform (https://challenge2025.debs.org/) to get benchmarked in the challenge. The evaluation platform provides detailed feedback on performance and allows the solution to be updated in an iterative process. A solution can be continuously improved until the challenge closing date. Evaluation results of the last submitted solution will be used for the final performance ranking.

The last step is to upload a short paper (maximum 6 pages) describing the final solution via the conference management tool. The DEBS Grand Challenge Committee will review all papers to assess the merit and originality of submitted solutions. Participants will have the opportunity to present their work during the poster session at the DEBS 2025 conference.

Query

The 2025 DEBS Grand Challenge involves implementing a monitoring system that takes as input a stream of optical tomography images of an object being created, and outputs a prediction of whether the object has defects in some areas.

Each optical tomography image contains, for each point P = (x, y), the temperature T(P) at that point, measured layer by layer (z).

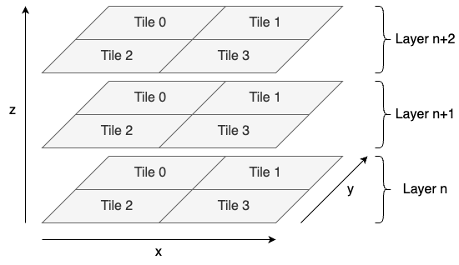

Images are submitted as a continuous stream, simulating the monitoring of the object while it is being printed. The stream delivers images one layer at a time, and each layer is split into multiple blocks, which we call tiles. See the figure below for reference.

The processing pipeline involves 4 steps:

- Saturation analysis. Within each tile, detect all points that surpass a threshold value of 65000.

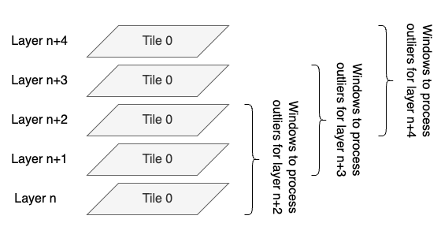

- Windowing. For each tile, keep a window of the last three layers.

- Outlier analysis. Within each tile window, for each point P of the most recent layer, compute its local temperature deviation D as the absolute difference between the mean temperature of its close neighbors (Manhattan distance 0 <= d <= 2, considering the 3 layers stacked on top of each other) and the mean temperature of its outer neighbors (Manhattan distance 2 < d <= 4). A point is an outlier if D > 5000.

- Outlier clustering. Using the outliers computed for the last received layer, find clusters of nearby outliers with DBSCAN, using the Euclidean distance between points as the distance metric.
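The saturation and outlier steps can be sketched in plain Python as follows. This is a minimal, unoptimized illustration, not a reference implementation, and it rests on several assumptions: each layer is a 2D list indexed as layer[y][x], the window lists layers oldest first (so window[-1] is the most recent layer), the Manhattan distance includes the layer offset dz from the most recent layer, and neighbors falling outside the tile are skipped rather than padded. The constant and function names are hypothetical.

```python
# Sketch of saturation and outlier analysis for one tile window.
# Assumptions: layer[y][x] indexing, window ordered oldest-first,
# out-of-tile neighbors ignored (no padding).
SATURATION_THRESHOLD = 65000
OUTLIER_THRESHOLD = 5000
CLOSE_RADIUS = 2   # close neighbors: 0 <= d <= 2
OUTER_RADIUS = 4   # outer neighbors: 2 < d <= 4


def count_saturated(layer):
    """Number of points whose temperature exceeds the saturation threshold."""
    return sum(1 for row in layer for t in row if t > SATURATION_THRESHOLD)


def local_deviation(window, x, y):
    """|mean(close neighbors) - mean(outer neighbors)| for point (x, y) of the newest layer."""
    height, width = len(window[-1]), len(window[-1][0])
    close_sum = close_n = outer_sum = outer_n = 0
    for dz in range(len(window)):           # dz = number of layers below the newest one
        layer = window[-1 - dz]
        for dy in range(-OUTER_RADIUS, OUTER_RADIUS + 1):
            for dx in range(-OUTER_RADIUS, OUTER_RADIUS + 1):
                d = abs(dx) + abs(dy) + dz  # 3D Manhattan distance
                nx, ny = x + dx, y + dy
                if d > OUTER_RADIUS or not (0 <= nx < width and 0 <= ny < height):
                    continue
                if d <= CLOSE_RADIUS:
                    close_sum += layer[ny][nx]
                    close_n += 1
                else:
                    outer_sum += layer[ny][nx]
                    outer_n += 1
    if outer_n == 0:  # degenerate tiny tile: no outer ring to compare against
        return 0.0
    return abs(close_sum / close_n - outer_sum / outer_n)


def find_outliers(window):
    """Points (x, y) of the newest layer whose deviation D exceeds the threshold."""
    newest = window[-1]
    return [(x, y)
            for y in range(len(newest))
            for x in range(len(newest[0]))
            if local_deviation(window, x, y) > OUTLIER_THRESHOLD]
```

For every point the sketch scans a 9 x 9 neighborhood in each layer of the window, so a practical solution would likely vectorize this (for example with convolutions over the stacked layers).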

For each input (tile) received from the stream, your solution should return:

- The number of saturated points.

- The centroid (x, y coordinates) and size (number of points) of the 10 largest clusters.
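For the clustering step and the per-tile output, the sketch below implements textbook DBSCAN directly (O(n^2), no spatial index) and then reports the centroid and size of the largest clusters. The defaults eps=20.0 and min_samples=5 are hypothetical placeholders, since the challenge text does not fix DBSCAN's parameters; as in scikit-learn, min_samples counts the point itself. A library implementation such as scikit-learn's DBSCAN could be used instead.

```python
import math
from collections import deque


def dbscan(points, eps, min_samples):
    """Plain O(n^2) DBSCAN with Euclidean distance; returns clusters, drops noise."""
    NOISE = -1
    labels = [None] * len(points)  # None = not yet visited

    def region(i):
        px, py = points[i]
        return [j for j, (qx, qy) in enumerate(points)
                if math.hypot(px - qx, py - qy) <= eps]

    n_clusters = 0
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = region(i)
        if len(seeds) < min_samples:   # not a core point (may later become a border point)
            labels[i] = NOISE
            continue
        labels[i] = n_clusters
        queue = deque(seeds)
        while queue:
            j = queue.popleft()
            if labels[j] == NOISE:     # border point: join the cluster, do not expand
                labels[j] = n_clusters
            if labels[j] is not None:
                continue
            labels[j] = n_clusters
            neighbors = region(j)
            if len(neighbors) >= min_samples:  # core point: keep expanding
                queue.extend(neighbors)
        n_clusters += 1

    clusters = [[] for _ in range(n_clusters)]
    for point, label in zip(points, labels):
        if label != NOISE:
            clusters[label].append(point)
    return clusters


def top_clusters(outliers, eps=20.0, min_samples=5, k=10):
    """(centroid_x, centroid_y, size) of the k largest clusters, largest first."""
    summary = []
    for cluster in dbscan(outliers, eps, min_samples):
        n = len(cluster)
        summary.append((sum(x for x, _ in cluster) / n, sum(y for _, y in cluster) / n, n))
    summary.sort(key=lambda c: c[2], reverse=True)
    return summary[:k]
```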

The figure below illustrates how windows are computed.

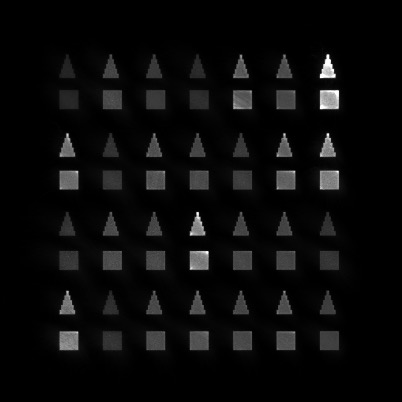
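A per-tile window of the last three layers can be kept with a bounded deque keyed by (print_id, tile_id), the batch fields that identify a tile. This is a sketch assuming that the layers of a tile arrive in increasing order; the class name TileWindows is hypothetical. For the first layers of each tile, the window simply holds fewer than three layers.

```python
from collections import deque


class TileWindows:
    """Keeps a sliding window of the last `size` layers for every tile."""

    def __init__(self, size=3):
        self.size = size
        self._windows = {}  # (print_id, tile_id) -> deque of (layer, image)

    def push(self, print_id, tile_id, layer, image):
        """Add a layer and return the tile's current window, oldest layer first."""
        window = self._windows.setdefault((print_id, tile_id), deque(maxlen=self.size))
        window.append((layer, image))  # a full deque(maxlen=...) evicts its oldest entry
        return [img for _, img in window]
```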

As a concrete example, consider the image below, extracted from the input dataset.

Saturation analysis identifies over 5000 saturated points, corresponding to the white areas (e.g., the triangle in the top-right corner).

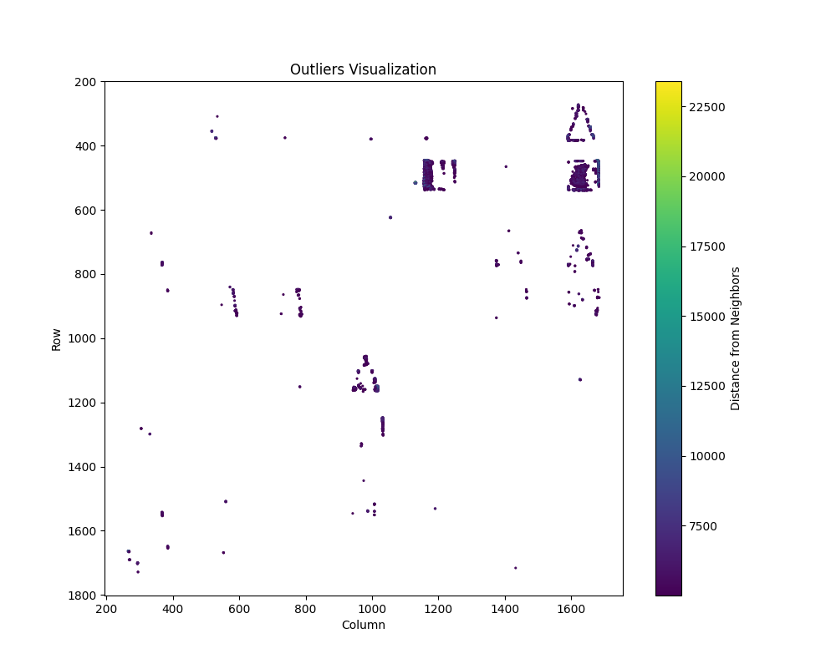

Outlier analysis identifies the points shown in the figure below.

Finally, the cluster analysis identifies the following clusters:

- Cluster 6: Centroid (498.1222272521503, 1633.0054323223178), Size 2209

- Cluster 5: Centroid (492.52658371040724, 1168.7143665158371), Size 1768

- Cluster 0: Centroid (343.49514563106794, 1624.8543689320388), Size 309

- Cluster 30: Centroid (1147.0236966824646, 1011.8388625592418), Size 211

- Cluster 7: Centroid (463.44827586206895, 1247.603448275862), Size 174

- Cluster 29: Centroid (1148.624060150376, 953.9774436090225), Size 133

- Cluster 32: Centroid (1267.4313725490197, 1032.4705882352941), Size 102

- Cluster 28: Centroid (1070.6382978723404, 981.3829787234042), Size 94

- Cluster 8: Centroid (458.10752688172045, 1212.6989247311828), Size 93

- Cluster 19: Centroid (893.3134328358209, 586.9701492537314), Size 67

Challenger 2.0 and Suggested Workflow

We have been using Challenger to disseminate the dataset and benchmark solutions [1, 2, 3]. This year, we introduce Challenger 2.0, which replaces RPC calls with a REST API and uses a containerized evaluation infrastructure [4]. The REST API can be accessed at http://challenge2025.debs.org:52923/api; its endpoints, as defined in the API's OpenAPI specification, are described in detail below. The API provides 5 endpoints to benchmark your solutions, used in the following order:

- /create (Creates a new benchmark)

- /start (Starts the benchmark)

- /next_batch (Get the next batch of data)

- /result (Submit results, then go back to /next_batch; repeat until there is no more data)

- /end (End the benchmark)
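The workflow above can be sketched as a small client that uses only the Python standard library. Several details are assumptions rather than documented behavior: that /create returns the bench_id as plain or JSON-quoted text, that the batch_number in the /result URL is the batch's sequence field, and the application/msgpack content type. MessagePack decoding and encoding are passed in as functions so the loop does not depend on a particular library; the helper names http_send and run_benchmark are hypothetical.

```python
import json
import urllib.error
import urllib.request

API_URL = "http://challenge2025.debs.org:52923/api"


def http_send(method, url, body=None, content_type=None):
    """Perform one HTTP request and return (status, body); HTTP errors are returned, not raised."""
    request = urllib.request.Request(url, data=body, method=method)
    if content_type:
        request.add_header("Content-Type", content_type)
    try:
        with urllib.request.urlopen(request) as response:
            return response.status, response.read()
    except urllib.error.HTTPError as error:
        return error.code, error.read()


def run_benchmark(process, decode, encode, send=http_send, api=API_URL,
                  user_id="id_of_user", name="name_of_bench", test=True, max_batches=None):
    """create -> start -> (next_batch -> result)* -> end; returns the number of batches."""

    def call(method, path, body=None, content_type=None):
        status, data = send(method, f"{api}{path}", body, content_type)
        if status >= 400 and not (path.startswith("/next_batch") and status == 404):
            raise RuntimeError(f"{method} {path} failed with status {status}")
        return status, data

    params = {"user_id": user_id, "name": name, "test": test, "queries": [0]}
    if max_batches is not None:
        params["max_batches"] = max_batches
    _, data = call("POST", "/create", json.dumps(params).encode(), "application/json")
    bench_id = data.decode().strip().strip('"')  # assumption: bench_id returned as (JSON) text
    call("POST", f"/start/{bench_id}")

    processed = 0
    while True:
        status, data = call("GET", f"/next_batch/{bench_id}")
        if status == 404:  # no more batches
            break
        batch = decode(data)
        # assumption: the batch_number URL parameter is the batch's sequence number
        call("POST", f"/result/0/{bench_id}/{batch['sequence']}",
             encode(process(batch)), "application/msgpack")
        processed += 1
    call("POST", f"/end/{bench_id}")
    return processed
```

With the msgpack package installed, a run might look like `run_benchmark(my_process, msgpack.unpackb, msgpack.packb, max_batches=10)`, where my_process is a hypothetical per-tile pipeline that returns a result dict.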

Containerized evaluation infrastructure

To foster cloud-native solutions in the community and ensure the reproducibility of solutions, we provide an evaluation infrastructure based on a Kubernetes cluster. Please containerize your solution, define a Kubernetes Job workload to deploy it, and upload the Job YAML file to Challenger so that your solution can be deployed on the cluster. Please run your final evaluations on the Challenger infrastructure. Challenger uses namespaces to sandbox solutions, together with ResourceQuotas and LimitRanges that give each namespace uniform resources for a fair evaluation. Each namespace is assigned 4 CPU cores and 8 GB of memory, which you can distribute among your pods. By default, each container is given 1 CPU core and 2 GB of memory.
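A Job manifest along the following lines could serve as a starting point. The sketch builds it as a Python dict and prints it as JSON, which is valid YAML 1.2, assuming Challenger's upload accepts JSON-style YAML. The job name, image name, and resource figures are hypothetical, and 2Gi is used as an interpretation of the 2 GB container default. Requests equal limits so that each pod's footprint within the namespace quota is predictable.

```python
import json


def job_manifest(name, image, cpu="1", memory="2Gi", backoff_limit=6):
    """Minimal Kubernetes Job that runs one solution container."""
    resources = {"cpu": cpu, "memory": memory}
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": name},
        "spec": {
            "backoffLimit": backoff_limit,  # retries before the Job is marked as failed
            "template": {
                "spec": {
                    "restartPolicy": "OnFailure",  # restart failed containers in place
                    "containers": [{
                        "name": name,
                        "image": image,
                        # keep the sum over all pods within the 4 CPU / 8 GB namespace quota
                        "resources": {"requests": dict(resources), "limits": dict(resources)},
                    }],
                },
            },
        },
    }


if __name__ == "__main__":
    # JSON is a subset of YAML 1.2, so the printed manifest can be saved as the job YAML file.
    manifest = job_manifest("gc-solution", "registry.example.com/gc-solution:latest",
                            cpu="2", memory="4Gi")
    print(json.dumps(manifest, indent=2))
```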

Fault-tolerance evaluations

Faults are a common occurrence in any infrastructure, and they can drastically reduce the performance of a solution [5]. A practical solution should be not only performant but also fault-tolerant. Challenger provides fault-tolerance evaluations to measure the performance of solutions under failure. Challenger can simulate process (pod) failures and network failures: a pod failure makes a process unavailable, while a network failure introduces delays in the solution's network. See Section 3.3 of [5] for a discussion of how these failures were chosen. Please run your solution against both kinds of faults at varying frequencies and report your results.

Micro Challenger

To speed up local development and remove network bandwidth constraints, we also provide Micro Challenger, which runs on your own systems. Micro Challenger uses the same endpoints as Challenger and is cross-compatible with it. Through its history endpoint, Micro Challenger offers a web interface where participants can visualize the statistics of their benchmarks (throughput and latency percentiles).

REST Endpoints

Here is a detailed description of the available endpoints:

- POST /api/create

Description: Creates a new "bench" or task with a set of parameters.

Input JSON:

{
  "user_id": "id_of_user",
  "name": "name_of_bench",
  "test": true,
  "queries": [0],
  "max_batches": <limit>
}

Where:

- user_id: Identifier for the user.

- name: The name of the bench.

- test: A boolean to specify if this is a test (test runs will not be considered in the online platform)

- queries: A list of queries to be executed (always [0] for this year's challenge)

- max_batches: The maximum number of batches to process (optional).

Response: Returns the bench_id of the created task.

- POST /api/start/{bench_id}

Description: Starts the execution of the bench identified by bench_id.

URL Parameter:

- bench_id: The identifier of the bench to be started.

Response: Returns status code 200 on successful start.

- GET /api/next_batch/{bench_id}

Description: Retrieves the next batch of data for the specified bench.

URL Parameter:

- bench_id: The identifier of the bench.

Response:

- On success: Returns a batch of data encoded in MessagePack (https://msgpack.org/).

- On completion: Returns status code 404 when there are no more batches available.

- POST /api/result/0/{bench_id}/{batch_number}

Description: Submits the result of processing a batch.

URL Parameters:

- bench_id: The identifier of the bench.

- batch_number: The number of the batch being submitted.

Data: The processed result serialized in MessagePack (https://msgpack.org/).

Response: Returns status code 200 on successful submission.

- POST /api/end/{bench_id}

Description: Marks the bench as completed.

URL Parameter:

- bench_id: The identifier of the bench.

Response: Returns the final result as a string upon successful completion.

Batch Input. Each batch contains data such as:

{
  "sequence": <int>,
  "print_id": <int>,
  "tile_id": <int>,
  "layer": <int>,
  "tif": <binary data>
}

Where:

- sequence: sequence number that uniquely identifies a batch;

- print_id: identifier of the object being printed;

- tile_id: identifier of the tile (see the figure above for reference);

- layer: identifier of the layer (z position) of the tile (see the figure above for reference);

- tif: the image in TIFF format.

Response. Each response contains data such as:

{
  "sequence": <int>,
  "query": 0,
  "print_id": <int>,
  "tile_id": <int>,
  "saturated": <int>,
  "centroids": [C0, C1, …]
}

Where:

- sequence: sequence number that uniquely identifies a batch;

- query: identifier of the query (always 0 for this year's challenge);

- print_id: identifier of the object being printed;

- tile_id: identifier of the tile;

- saturated: number of saturated points;

- centroids: list of centroids, where each centroid is defined by its coordinates (x, y) and its size (the number of points belonging to its cluster)
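A result for one tile might be assembled as below. The field names of each centroid entry (x, y, count) are an assumption, since the text only states that a centroid carries its coordinates and its size; make_response is a hypothetical helper. Note that the batch's layer and tif fields are not echoed in the response.

```python
def make_response(batch, saturated, clusters):
    """Assemble the per-tile result; `clusters` holds (x, y, size) tuples, largest first."""
    return {
        "sequence": batch["sequence"],
        "query": 0,
        "print_id": batch["print_id"],
        "tile_id": batch["tile_id"],
        "saturated": saturated,
        # assumption: field names "x", "y", "count" for each centroid entry
        "centroids": [{"x": x, "y": y, "count": size} for x, y, size in clusters],
    }
```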

Awards and Selection Process

Participants of the challenge compete for the performance award. The winner is determined through the automated evaluation platform Challenger (see above), according to criteria of performance (throughput and latency) and fault tolerance. In the case of submissions with similar performance characteristics, the committees will evaluate qualities of the solutions that are not tied to performance. Specifically, participants are encouraged to consider the practicability of the solution.

Regarding the practicability of a solution, we encourage participants to push beyond solving a problem correctly only in the functional sense. Instead, the aim is to provide a reusable and extensible solution that is of value beyond this year’s Grand Challenge alone, using available software systems or home-made but general-purpose processing engines.

In particular, we will reward solutions that adhere to a list of non-functional criteria, such as configurability, scalability (horizontal scalability is preferred), operational reliability/resilience, accessibility of the solution's source code, integration with standard tools and protocols, documentation, security mechanisms, deployment support, portability/maintainability, and support of special hardware (e.g., FPGAs, GPUs, SDNs, ...). These criteria are driven by industry use cases and scenarios, to ensure that solutions are applicable in practical settings.

References:

- [1] The DEBS 2021 grand challenge: analyzing environmental impact of worldwide lockdowns. Tahir et al. DEBS '21. https://doi.org/10.1145/3465480.3467836

- [2] Detecting trading trends in financial tick data: the DEBS 2022 grand challenge. Frischbier et al. DEBS '22. https://doi.org/10.1145/3524860.3539645

- [3] The DEBS 2024 Grand Challenge: Telemetry Data for Hard Drive Failure Prediction. De Martini et al. DEBS '24. https://doi.org/10.1145/3629104.3672538

- [4] Challenger 2.0: A Step Towards Automated Deployments and Resilient Solutions for the DEBS Grand Challenge. Tahir et al. DEBS '24. https://doi.org/10.1145/3629104.3666027

- [5] How Reliable Are Streams? End-to-End Processing-Guarantee Validation and Performance Benchmarking of Stream Processing Systems. Tahir et al. PVLDB '24. https://doi.org/10.14778/3712221.3712227


ACM Policies and Procedures

By submitting your article to an ACM Publication, you are hereby acknowledging that you and your co-authors are subject to all ACM Publications Policies, including ACM's Publications Policy on Research Involving Human Participants and Subjects. ACM will investigate alleged violations of this policy or any ACM Publications Policy; a violation may result in a full retraction of your paper, in addition to other potential penalties, as per ACM Publications Policy.

For the ACM Policy on Authorship, which also covers the use of generative AI tools in preparing manuscripts, please check the frequently asked questions page.

Please ensure that you and your co-authors obtain an ORCID ID, so you can complete the publishing process for your accepted paper.

Important Dates

| Events | Dates (AoE) |
|---|---|
| Research Papers | |
| Abstract Submission | |
| Paper Submission | |
| Rebuttal (start) | March 21st, 2025 |
| Rebuttal (end) | March 28th, 2025 |
| Notification | |
| Camera Ready | |
| Submission Dates | |
| Industry and Application Papers | |
| Tutorials and Workshops | |
| Posters and Demos | |
| Grand Challenge Short Paper | |
| Doctoral Symposium | |
| Notification Dates | |
| Tutorials and Workshops | |
| Industry and Application Papers | |
| Posters and Demos | |
| Doctoral Symposium | |
| Camera Ready | |
| Industry and Application Papers | |
| Posters and Demos | |
| Tutorials and Workshops | |
| Grand Challenge | |
| Grand Challenge Platform | |
| Registration | December, 2024 |
| Platform Opens | February 15th, 2025 |
| Platform Closes | May 9th, 2025 |
| Conference | |
| Conference | June 10th–13th 2025 |